Prepare a libvirt KVM/QEMU server for PoS validators

In this document we explain how to prepare a libvirt KVM/QEMU server for Proof of Stake (PoS) validators.

A serious Proof of Stake project needs physical servers. The requirements include a dedicated server infrastructure with every single point of failure covered and every security measure taken into account. A second, no less important, aspect is the ease and cost of maintenance.

Not long ago, virtualizing machines was a handicap and a waste of resources. Nowadays, libvirt KVM/QEMU systems reach close to 99% of bare-metal performance, which means that almost all of a physical host's hardware power can be presented to the virtual machines running on it. The addition of dedicated CPU instructions for virtualization (Intel VT-x, AMD-V) has also made kernel-level virtualization steadily more efficient.
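To confirm that a host's CPU exposes these instructions, you can look for the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in `/proc/cpuinfo`. The following is a minimal sketch. The function name `virt_extension` is hypothetical:

```shell
# Print which hardware virtualization extension the CPU advertises:
# "vmx" = Intel VT-x, "svm" = AMD-V. Returns 1 if neither is present.
# Optional argument: path to a cpuinfo file (default /proc/cpuinfo).
virt_extension() {
  cpuinfo_file=${1:-/proc/cpuinfo}
  # Only inspect the "flags" lines so unrelated fields cannot match.
  cpu_flags=$(grep -E '^flags[[:space:]]*:' "$cpuinfo_file")
  for ext in vmx svm; do
    # -w: match the flag as a whole word, not as part of another flag.
    if printf '%s\n' "$cpu_flags" | grep -qw "$ext"; then
      echo "$ext"
      return 0
    fi
  done
  return 1
}
```

A missing flag means the CPU cannot run hardware-assisted KVM guests. A present flag does not guarantee that the firmware has virtualization enabled, so loading the `kvm_intel`/`kvm_amd` module remains the final check.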

KVM is the Linux kernel’s own answer to virtualization. As a result, it is the lightest, most stable and most universal virtualization option for Linux systems. While KVM is not as simple to set up as packaged solutions like VirtualBox, it is ultimately more efficient and flexible.

Large cloud providers build their core business on renting KVM/QEMU-based virtual machines behind a friendly web GUI.

What if we build our own virtualization system?

We had previously used third-party virtual machines, normally small ones with 2–4 CPUs and roughly the same number of GB of RAM. Our requirements were clear from the beginning:

- Hosting virtual machines in our own failure-proof environment
- High availability
- Easy to clone, scale, move…
- Security

So we decided to buy some hardware…

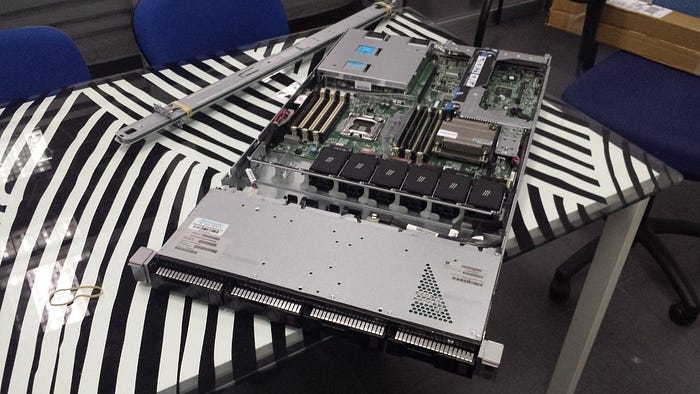

This is one of the machines we are going to use in the Game of Stakes:

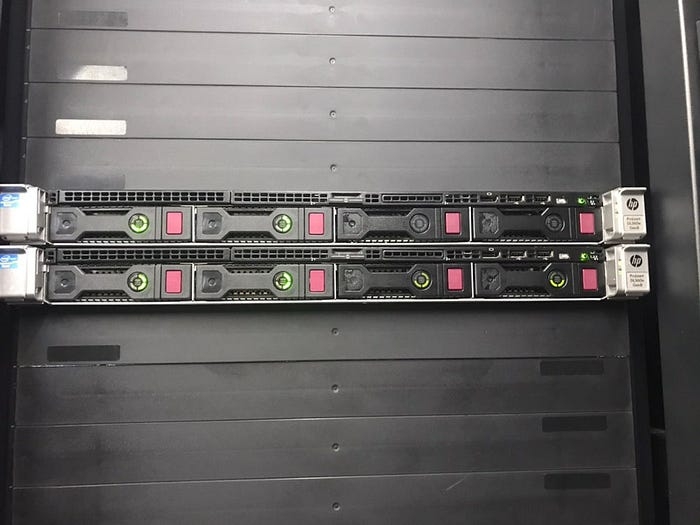

As a starting point we prepared a couple of small 1U HP ProLiant servers, each with 16 GB of ECC RAM and an 8-core/16-thread CPU. Storage is based on LVM cache, which gives us both the speed and the space we need: 2 TB spinning disks cached by 500 GB SSDs. LVM cache volumes are managed the same way as ordinary LVM volume groups and logical volumes.
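The following is a minimal sketch of building such a cached volume with standard LVM commands. It assumes one spinning disk and one SSD per volume group. The function name `setup_lvm_cache`, the LV names `data`/`data_cache`, the device paths and the sizes are all hypothetical. `pvcreate` and `vgcreate` wipe the given devices, so double-check them before running the function as root:

```shell
# Build an LVM-cached logical volume: origin on a slow spinning disk,
# cache pool on a fast SSD. All names and sizes are hypothetical.
setup_lvm_cache() {
  vg=$1          # volume group name, e.g. vg_data
  hdd=$2         # spinning disk, e.g. /dev/sdb (2 TB)
  ssd=$3         # SSD, e.g. /dev/sdc (500 GB)
  cache_size=$4  # cache pool size, e.g. 450G (leaves headroom on the SSD)

  pvcreate "$hdd" "$ssd" || return 1
  vgcreate "$vg" "$hdd" "$ssd" || return 1
  # Origin LV: all free extents of the spinning disk only.
  lvcreate -n data -l 100%PVS "$vg" "$hdd" || return 1
  # Cache pool (LVM creates its data and metadata sub-LVs) on the SSD only.
  lvcreate --type cache-pool -n data_cache -L "$cache_size" "$vg" "$ssd" || return 1
  # Attach the pool: "$vg/data" becomes a cached LV, used like any other LV.
  lvconvert --type cache --cachepool "$vg/data_cache" "$vg/data"
}
```

For example, `setup_lvm_cache vg_data /dev/sdb /dev/sdc 450G` would produce a cached `/dev/vg_data/data`. You can then format it, or hand it to a guest as a disk, like any other logical volume. If you leave the cache in LVM's default writethrough mode, every write also reaches the spinning disk, so the SSD never holds the only copy of the data.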

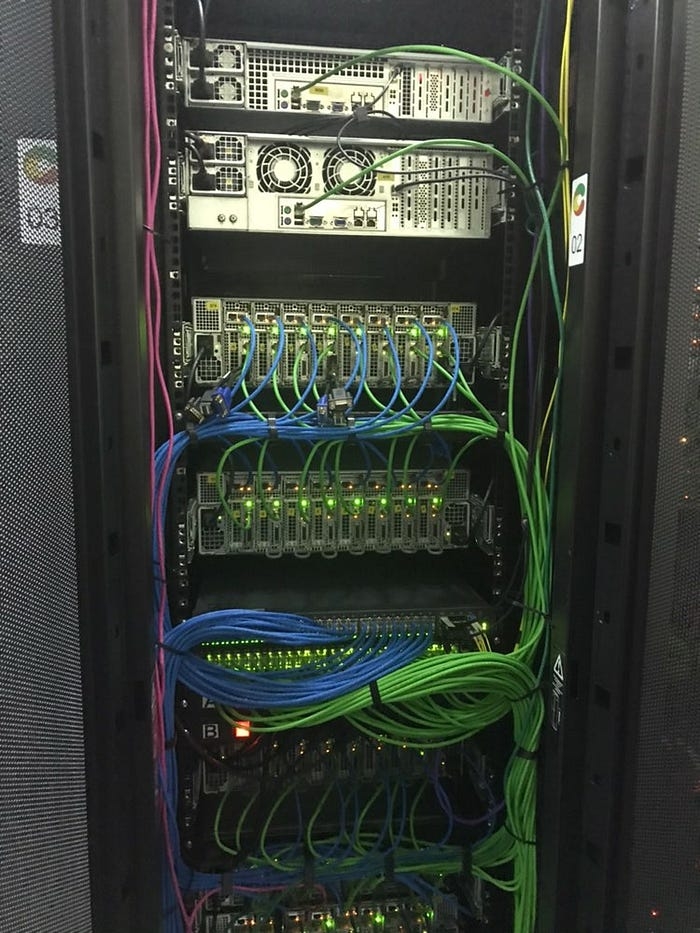

Once in the housing facilities we could placed them in a almost un-used rack. It was nice to see the extremely care showed by the personal. You can lay assured that your machines are in good hands when you see a back side of a rack looking like this one:

We were also impressed by the facility's security measures: cameras, motion sensors, biometric access control, redundant power and cooling, a fire-suppression system, five fiber connections…

Operating system

The host runs a CentOS 7 minimal install. The virtualization stack is installed in headless mode, without any graphical tools (run as root):

```shell
yum install qemu-kvm libvirt libvirt-python libguestfs-tools virt-install
```
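After installation, libvirtd has to be enabled and started. `virt-host-validate`, shipped with libvirt's client tools, can then check that `/dev/kvm`, the KVM kernel modules and cgroups are usable. The following is a minimal sketch. The wrapper function `prepare_kvm_host` is hypothetical. CentOS 7 ships systemd 219, which predates `systemctl enable --now`, so enabling and starting are separate calls:

```shell
# Post-install steps for a headless CentOS 7 KVM host (run as root).
prepare_kvm_host() {
  # Start libvirtd on every boot, and right now.
  systemctl enable libvirtd || return 1
  systemctl start libvirtd || return 1
  # Check /dev/kvm, kernel modules, cgroups, etc. for the QEMU/KVM driver.
  virt-host-validate qemu
}
```

Resolve any `FAIL` line in the validation output before creating guests.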

By default, libvirt creates a private NAT network (192.168.122.0/24, on the virbr0 bridge), and virtual machines running on the host reach the outside world through masquerading. This is enough if the host has just one public IP and you want to run more than one virtual machine at the same time; we are taking part in several testnets with this setup. The key is forwarding selected ports from the host to your virtual machines.
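The following is a minimal sketch of such a port forward with iptables. It follows the DNAT-plus-FORWARD pattern the libvirt wiki describes for guests on the default NAT network. The function name `forward_to_guest`, the guest address `192.168.122.10` and port `26656` are illustrative. That port is Tendermint's default P2P port, assumed here for a Cosmos-based testnet such as Game of Stakes:

```shell
# Forward a public host port to a guest on libvirt's default NAT network.
# Rules are added at the top of their chains and are NOT persistent.
forward_to_guest() {
  host_port=$1   # port exposed on the host's public IP
  guest_ip=$2    # guest address on virbr0, e.g. 192.168.122.10
  guest_port=$3  # port the guest service listens on
  proto=${4:-tcp}

  # Rewrite the destination of incoming packets to the guest.
  iptables -t nat -I PREROUTING -p "$proto" --dport "$host_port" \
    -j DNAT --to-destination "$guest_ip:$guest_port" || return 1
  # libvirt's FORWARD rules only let established traffic into virbr0,
  # so new connections to this guest port must be accepted explicitly.
  iptables -I FORWARD -o virbr0 -p "$proto" -d "$guest_ip" \
    --dport "$guest_port" -j ACCEPT
}
```

For example, `forward_to_guest 26656 192.168.122.10 26656` would expose a validator's P2P port. These rules do not survive a reboot. libvirt may also re-insert its own rules ahead of them, and a firewalld reload discards rules added directly with `iptables`. The libvirt wiki therefore suggests applying them from a QEMU hook script (`/etc/libvirt/hooks/qemu`) when the guest starts.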

If, on the other hand, you want to avoid NAT and give each virtual machine its own public IP, the best solution is a bridge on each physical NIC. The guests attach to that bridge transparently, and each public IP is configured directly on the guest's NIC.
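On CentOS 7 such a bridge can be defined with network-scripts files. The following is a minimal sketch that writes them for one NIC, assuming static addressing. The function name `write_bridge_ifcfg`, the NIC `eno1`, the bridge `br0` and the addresses are hypothetical. The files go to the current directory unless you give a target directory:

```shell
# Write ifcfg files that move the host's IP from a physical NIC onto a
# bridge, so guests can attach to the bridge with their own public IPs.
write_bridge_ifcfg() {
  nic=$1           # physical NIC, e.g. eno1
  bridge=$2        # bridge name, e.g. br0
  ip=$3            # host public IP, now carried by the bridge
  prefix=$4        # netmask length, e.g. 24
  gateway=$5       # default gateway
  outdir=${6:-.}   # /etc/sysconfig/network-scripts on a real host

  # The bridge owns the host's IP configuration.
  cat > "$outdir/ifcfg-$bridge" <<EOF
DEVICE=$bridge
TYPE=Bridge
ONBOOT=yes
BOOTPROTO=none
IPADDR=$ip
PREFIX=$prefix
GATEWAY=$gateway
DELAY=0
EOF
  # The physical NIC becomes a bridge port with no IP of its own.
  cat > "$outdir/ifcfg-$nic" <<EOF
DEVICE=$nic
TYPE=Ethernet
ONBOOT=yes
BOOTPROTO=none
BRIDGE=$bridge
EOF
}
```

Review the files, then copy them to `/etc/sysconfig/network-scripts/` and restart networking from an out-of-band console, because a mistake here cuts off remote access. Call the function once per physical NIC. Guests can then be attached with, for example, `virt-install ... --network bridge=br0`.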

We deployed firewalls at two levels: one on the host, blocking everything except the ports we need to forward, and a second one inside each virtual machine. Anti-DDoS protection is implemented at both levels, and so is kernel parameter tuning.
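As an illustration of the kernel side, here is a sysctl drop-in with common anti-SYN-flood and anti-spoofing settings. These are widely used hardening values, given as an assumption rather than a record of the exact parameters used in this setup. The function name `print_hardening_sysctl` is hypothetical:

```shell
# Print a sysctl.d drop-in with common network-hardening settings.
# Values are typical examples, not a tuned production profile.
print_hardening_sysctl() {
  cat <<'EOF'
# SYN flood mitigation
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 4096
net.ipv4.tcp_synack_retries = 2
# Drop packets whose source address fails the reverse-path check
net.ipv4.conf.all.rp_filter = 1
net.ipv4.conf.default.rp_filter = 1
# Ignore broadcast pings and bogus ICMP error responses
net.ipv4.icmp_echo_ignore_broadcasts = 1
net.ipv4.icmp_ignore_bogus_error_responses = 1
# Refuse source-routed packets and ICMP redirects
net.ipv4.conf.all.accept_source_route = 0
net.ipv4.conf.all.accept_redirects = 0
net.ipv4.conf.all.send_redirects = 0
EOF
}
```

To apply it on the host and in each guest, run `print_hardening_sysctl > /etc/sysctl.d/90-hardening.conf && sysctl --system` (the file name is arbitrary). Strict reverse-path filtering (`rp_filter = 1`) can drop legitimate traffic on multi-homed hosts with asymmetric routing; loose mode (`2`) is the safer choice there.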
